Candidate evaluation methods that make hiring smarter in 2026

Remember the school science fair? The judges didn’t reward the tallest volcano. They used rubrics and measured creativity, method, clarity, and results. It felt fair and surfaced real talent, and the winners were those who held up under questions, not just glitter.

That’s the essence of smart candidate evaluation. Instead of chasing flashy résumés, you define what “great” looks like, test it consistently, and let evidence guide decisions. Structured interviews, work samples, and scorecards reduce bias and bring clarity. In this guide, we’ll help you apply that same rigor to hiring, so every decision feels fair, data-driven, and confidently built to last.

- Structured candidate evaluation replaces hunches with evidence, improving hiring accuracy.

- Standardized questions, rubrics, and work samples reduce bias and inconsistency.

- Behavioral and situational questions reveal judgment; technical tasks prove applied skills.

- Use hybrid individual scoring plus group debriefs for balanced, fair decisions.

- Hummer AI standardizes scorecards, automates assessments, and speeds evidence-first debriefs.

What is candidate evaluation in the hiring process?

Candidate evaluation is like QA testing before a product release: you define acceptance criteria, run consistent checks, and ship only what meets the bar. In hiring, that mindset sets the stage for fair decisions, clear trade-offs, and fewer surprises, so every interview produces evidence you can trust. It creates shared language between recruiters and hiring managers.

Candidate evaluation is the structured way to judge fit, skills, and potential against job-ready criteria. You turn requirements into a scorecard, run the same checks for every applicant, and record evidence. The outcome is apples-to-apples comparisons instead of gut calls.

Methods include structured interviews, job-relevant work samples, skills assessments, and reference checks mapped to competencies. Standardized scoring reduces bias and noise, improves selection ratio, and raises the quality of hire. Rubrics accelerate debriefs, so hiring managers decide faster with less back-and-forth.

Unlike ad-hoc interviews, a documented process protects candidate experience. Candidates know what’s assessed, when, and how feedback flows. Consistency feels fair, signals professionalism, and keeps interview fatigue low, helping you attract talent even when your brand or benefits aren’t the loudest.

Finally, evaluation creates a data trail you can audit and improve. Score distributions highlight noisy questions, and work-sample outcomes show which tasks predict success. Over time, you’ll lift the quality of hire, trim time to fill, and tighten interview-to-hire ratio, winning your leadership team’s notice.

Why candidate evaluation is essential for better hiring decisions

Smart hiring is like shipping a stable release: define tests, run them consistently, and only push what passes. Candidate evaluation turns that mindset into action, so interviews produce evidence, not hunches, and decisions balance speed, fairness, quality of hire, selection ratio, and long-term impact without derailing your roadmap today and tomorrow.

Candidate evaluation ensures hiring decisions are based on evidence, not instinct.

By using structured scorecards, consistent rubrics, and work samples, organizations reduce bias, improve quality of hire, speed up decisions, and build a fair, data-driven process that reliably identifies top talent.

- Cuts bias and noise: Structured scorecards and consistent interview questions reduce drift, confirmation bias, and halo effects. Candidates are judged against the same criteria, improving fairness and candidate experience while protecting diversity goals and legal defensibility across markets.

- Improves signal to noise: Job-relevant work samples and skills assessments surface ability early. Weighted scorecards prevent weak signals from dominating debriefs, raising selection ratio and quality of hire while steadily trimming time to fill for truly critical roles.

- Reduces cost per hire: Fewer loops, clearer criteria, and faster rejections cut wasted hours. By prioritizing high-probability candidates through structured evaluation, sourcing spend drops and hiring manager time improves. Budgets stretch further without hurting candidate experience or offer acceptance rate.

- Speeds time to fill: Calibrated rubrics align interviewers on day one. With evidence logged consistently, debriefs shrink and decisions accelerate, especially for high-volume roles. Qualified applicants move quickly through the funnel, improving applicant-to-hire ratio and reducing competing offers.

- Enhances candidate experience: Clear instructions, predictable stages, and job-relevant tasks show respect. Candidates know what’s measured and why, receive timely updates, and face fewer hoops. Transparency boosts brand perception and increases offer acceptance, even when compensation isn’t the market leader.

- Improves quality of hire: Competencies tied to outcomes link interviews to performance. You test must-have skills, values alignment, and learning speed with structured prompts. Strong signals cluster across interviewers, reducing false positives and elevating future high performers teams actually keep.

- Enables continuous improvement: Score distributions and question-level analytics reveal noisy interviewers and weak prompts. You retire low-signal tasks, upgrade work samples, and retrain panels. Over time, interview-to-hire ratio stabilizes, adverse impact shrinks, and selection accuracy climbs across job families.

- Strengthens compliance and fairness: A documented process, anchored in job analysis and consistent scoring, shows fairness. It helps defend decisions, meet audit needs, and align with regional rules while still allowing structured flexibility for senior roles and specialized hiring.

Knowing why candidate evaluation matters is only half the story; the real impact comes from how you apply it. Next, let’s explore the core principles that make every evaluation fair, consistent, and predictive of long-term success.

Core principles of fair and consistent candidate evaluation

Fair candidate evaluation is like a calibrated scale: every candidate is weighed the same way, and outliers prompt discussion. When your process uses shared criteria, structured interviews, skills assessments, and job-relevant work samples, hiring signals get cleaner. Here’s how to build consistency with scorecards without slowing decisions or dampening candidate experience.

- Anchor the role with job analysis: Define must-have competencies, level expectations, and success outcomes. Translate them into clear interview questions, work samples, and rating guides. This keeps evaluations job-related, boosts selection ratio, and improves legal defensibility without adding bureaucracy.

- Use structured interviews consistently: Ask the same job-relevant questions, in the same sequence, across candidates. Provide behavioral anchors and scoring rubrics. Consistency cuts bias and noise, strengthens candidate experience, and raises quality of hire without slowing teams significantly.

- Score with weighted criteria: Convert competencies into numeric scales and log evidence, not impressions, for each rating. Weighted scorecards keep must-haves from being diluted by charm, improving selection ratio and decision clarity. Calibration improves time to fill and reduces back-and-forth.

- Prefer work samples and skills assessments: Simulate real tasks with right-sized scope. Evaluate clarity, problem solving, and output quality using the same rubric. These tests predict performance better than brainteasers, raise quality of hire, and give candidates a fair experience.

- Calibrate interviewers as a panel: Align on what “meets bar” means using examples and red flags. Run shadowing and periodic calibration. Calibration cuts rating drift, reduces false positives, and keeps candidate evaluation consistent across locations, teams, and stages.

- Run evidence-first debriefs: Start with independent scores, then discuss evidence tied to competencies. Avoid anchoring by withholding overall votes until the end. This speeds decisions, keeps the process fair, and documents reasoning for compliance, audits, and future quality-of-hire analysis.

- Track funnel metrics, not feelings: Monitor time to fill, interview-to-hire ratio, applicant-to-hire ratio, and offer acceptance rate. Tie to evaluation stages to spot drop-offs. Replace debates with numbers, then iterate rubrics and training to improve selection accuracy and throughput.

- Design for candidate experience and fairness: Share stages, timelines, and expectations early. Use job-relevant tasks, accessible formats, and reasonable prep. A respectful process widens candidate diversity, builds trust, and keeps your brand competitive even when benefits aren’t the highest.
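
The weighted-scorecard principle above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed format: the competency names, the weights, and the `Rating` and `weighted_score` names are all assumptions made for the example. It shows two ideas from the list: every rating must carry evidence, and the summary is a weighted average on the same 1–5 scale.

```python
from dataclasses import dataclass

# Hypothetical competencies and weights (assumed for illustration); they sum to 1.0.
WEIGHTS = {"problem_solving": 0.4, "communication": 0.3, "execution": 0.3}


@dataclass
class Rating:
    competency: str
    score: int      # 1-5 scale
    evidence: str   # what the candidate actually said or did


def weighted_score(ratings: list[Rating]) -> float:
    """Weighted average of 1-5 ratings; rejects missing, out-of-range, or evidence-free ratings."""
    by_competency = {r.competency: r for r in ratings}
    missing = set(WEIGHTS) - set(by_competency)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    total = 0.0
    for competency, weight in WEIGHTS.items():
        rating = by_competency[competency]
        if not 1 <= rating.score <= 5:
            raise ValueError(f"{competency}: score must be between 1 and 5")
        if not rating.evidence.strip():
            raise ValueError(f"{competency}: a rating needs evidence, not an impression")
        total += weight * rating.score
    return round(total, 2)


ratings = [
    Rating("problem_solving", 4, "Walked through a rollback plan with trade-offs"),
    Rating("communication", 3, "Clear summary, missed one stakeholder question"),
    Rating("execution", 5, "Finished the work sample within the time limit"),
]
print(weighted_score(ratings))  # 4.0
```

Rejecting a rating with empty evidence is a deliberate design choice here: it makes "log evidence, not impressions" something the form enforces rather than something interviewers have to remember.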

Candidate evaluation vs candidate assessment and why the difference matters

Choosing between evaluation and assessment is like prepping for a marathon versus the final race: one builds readiness, the other proves ability. In hiring, knowing the difference avoids wasted loops, ensures structured candidate evaluation, and creates fair candidate evaluation outcomes that help teams hire faster and smarter.

What are the key candidate evaluation categories and areas to focus?

Designing a hiring rubric is like planning a sprint backlog: prioritize high-impact stories, define acceptance criteria, and align the team early. These categories steer interview evaluation methods toward reliable signals. They reduce bias in candidate evaluation across teams, roles, and locations, keeping decisions fast, consistent, and defensible everywhere over time.

- Role outcomes and competencies: Start with success criteria and level benchmarks. Map each competency to behavioral indicators and interview questions. Clear definitions keep panels aligned, reduce debate during debriefs, and make trade-offs visible when signals conflict across interviews.

- Skills and domain knowledge: Use job-relevant work samples, code or writing tests, and structured troubleshooting prompts. These interview evaluation methods reveal depth and provide comparable evidence for debriefs, even when candidates come from different industries or nontraditional backgrounds.

- Problem solving and judgment: Present ambiguous scenarios with constraints and trade-offs. Ask candidates to narrate choices, risks, and fallback plans. Structured reasoning and practical judgment consistently predict ramp speed in practice better than memorized frameworks or polished, rehearsed stories.

- Communication and collaboration: Evaluate clarity, listening, and stakeholder alignment using role-play or artifact walkthroughs. Probe how candidates seek feedback and resolve conflict. Strong communication reduces rework, speeds decisions, and improves handoffs, particularly in distributed teams and cross-cultural environments.

- Execution and ownership: Use timelines, blockers, and prioritization prompts. Ask for examples showing grit, follow-through, and trade-offs when resources shrink. Evidence of reliable delivery correlates with quality of hire and keeps pleasant interviews from masking weak, inconsistent execution in real projects.

- Learning agility and growth: Test how candidates approach unfamiliar tools, new domains, and feedback. Look for curiosity, iteration speed, and self-correction. Learning velocity keeps teams adaptable and predicts long-term potential better than static experience or brand-name employers alone.

- Values alignment and ethics: Use realistic dilemmas that surface judgment under pressure. Probe for integrity, inclusion, and willingness to challenge harmful shortcuts. Shared values reduce mis-hires, protect culture, and ensure decisions hold up when incentives conflict or deadlines squeeze teams.

- Process consistency and fairness: Standardize stages, question banks, and scoring rubrics. Train panels to avoid common traps and calibrate regularly. This operational discipline enables fair candidate evaluation, reducing bias in candidate evaluation and improving applicant-to-hire ratio without adding unnecessary loops.

- Evidence and documentation: Capture notes tied to competencies, not anecdotes. Require a candidate evaluation form with ratings and risks. Strong documentation accelerates debriefs, supports audits, and significantly improves interview evaluation methods with clearer, consistently structured training data over time.

How to evaluate candidates effectively in 2026: step-by-step guide

Evaluating candidates is like running a release train: small, repeatable steps ship reliable builds on time. When hiring sprints follow a checklist, signals sharpen and bias drops. In 2026, use this step-by-step play to turn interviews into consistent evidence that guides fair candidate evaluation. You’ll also see where candidate assessment fits and how to run it.

Evaluating candidates effectively in 2026 means using structured interviews, calibrated scorecards, and evidence-first debriefs.

By standardizing questions, adding job-relevant work samples, and tracking outcomes, hiring teams reduce bias, improve accuracy, and make faster, fairer, data-driven hiring decisions across roles and locations.

- Define success and must-have competencies: Write role outcomes, levels, and behaviors. Convert them into measurable criteria and acceptance tests. This anchors structured candidate evaluation and keeps interview evaluation methods job-relevant, reducing bias in candidate evaluation from the very first conversation.

- Pick a candidate assessment and evidence plan: Decide where skills tests, work samples, and take-homes add signal. Set time limits and scoring guides. Keep tasks job-relevant and accessible to support fair candidate evaluation and avoid hoops that inflate drop-offs in candidate recruitment.

- Build a candidate evaluation template and form: List competencies with 1–5 scales, definitions, and red-flag notes. Require evidence under each rating. Templates standardize panel notes, make signals comparable, and provide evaluation help for faster debriefs and better audit trails.

- Calibrate the panel before interviews: Share examples for “meets bar,” practice scoring the same sample answer, and align follow-ups. This trims rating drift, reduces bias in candidate evaluation, and helps interviewers apply interview evaluation methods consistently across locations and roles.

- Run a structured screen first: Use a short, consistent phone or video screen to confirm basics, motivation, and required constraints. Document outcomes in the candidate evaluation form. Early structure preserves time and improves fairness without blocking strong, nontraditional profiles.

- Assign a job-relevant work sample: Scope a realistic task with clear inputs, outputs, and evaluation criteria. Provide time guidance and rubrics. Work samples add objective signals, support fair candidate evaluation, and predict quality of hire better than conversational interviews alone.

- Run structured interviews by competency: Ask the same questions in the same order, with behavioral anchors and scoring notes. Probe decisions and trade-offs. This keeps interviews job-relevant, improves comparability, and strengthens structured candidate evaluation consistently across multiple interviewers.

- Score with weights and evidence: Rate each competency independently, attach notes, and calculate a weighted summary. Keep overall votes private until evidence is shared. Weights stop charisma from dominating, improving selection accuracy and reducing bias in candidate evaluation.

- Debrief evidence-first, fast: Start with independent scores, then discuss deltas tied to the rubric and candidate assessment results. Decide hire or no-hire with explicit trade-offs. This speeds decisions, improves fairness, and produces a clear narrative for quality-of-hire checks.

- Close the loop and improve: Track time to fill, interview-to-hire ratio, and offer acceptance rate by stage. Review funnel health and bias audits. Use learnings, then update the candidate evaluation template and retrain panels regularly based on outcomes.
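
The last step, tracking the funnel, comes down to simple arithmetic. The sketch below is illustrative only: the function name `funnel_metrics` and the sample numbers are made up, and it assumes time to fill is counted in calendar days from the date the role opened to the date it was filled (teams define this differently).

```python
from datetime import date


def funnel_metrics(opened: date, filled: date, interviews: int,
                   hires: int, offers_made: int, offers_accepted: int) -> dict:
    """Compute three funnel metrics for one role; ratios are None when undefined."""
    return {
        # Assumption: calendar days between role opened and role filled.
        "time_to_fill_days": (filled - opened).days,
        # How many interviews it took, on average, to produce one hire.
        "interview_to_hire_ratio": round(interviews / hires, 2) if hires else None,
        # Share of offers that candidates accepted.
        "offer_acceptance_rate": round(offers_accepted / offers_made, 2) if offers_made else None,
    }


# Made-up example numbers for a single role.
metrics = funnel_metrics(
    opened=date(2026, 1, 5),
    filled=date(2026, 2, 16),
    interviews=24,
    hires=2,
    offers_made=3,
    offers_accepted=2,
)
print(metrics)
# {'time_to_fill_days': 42, 'interview_to_hire_ratio': 12.0, 'offer_acceptance_rate': 0.67}
```

Running the same calculation per stage, rather than per role, is what lets you see where candidates drop off.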

Does candidate evaluation differentiate between generations?

Evaluating by generation is like assigning project roles by coffee preference: catchy label, zero proof. In practice, performance comes from fit, training, and working conditions. That’s why modern processes emphasize job-relevant signals and consistent rules, not birth-year assumptions, to keep decisions fair, fast, defensible, and compliant. Focus on competencies, not cohorts.

- Start with job analysis, not age labels: Define outcomes and competencies, then apply structured candidate evaluation to everyone. Role-relevant rubrics drive fair candidate evaluation and reduce bias, avoiding stereotypes while improving selection accuracy and interview evaluation methods across teams.

- Validate assessments for fairness: Choose candidate assessment tools validated for the role and monitor age-based adverse impact. Run audits, rotate question banks, and document cut scores. This keeps fair candidate evaluation defensible and reduces bias without diluting predictive power.

- Standardize interviews and language: Ask the same job-relevant questions, use scoring guides, and avoid generational shorthand. Offer accessible formats and accommodations. Consistency strengthens interview evaluation methods, supports structured candidate evaluation, and reduces bias while keeping conversations humane and efficient.

- Calibrate panels and blind early screens: Train interviewers together, sample-score artifacts, and strip age proxies from resumes where lawful. Independent scoring before discussion tames anchoring. These practices enable fair candidate evaluation and reduce bias across sites and seniority levels.

- Offer equivalent ways to show skill: Provide portfolios, code tests, or case walkthroughs covering the same competencies. Keep time, scope, and scoring identical. Flex channels, video or written, without changing task difficulty, preserving fairness while widening candidate recruitment reach.

- Measure outcomes by age cohort without using age in decisions: Track pass rates, interview-to-hire ratio, and offer acceptance rate. Investigate gaps, then update rubrics or tasks. This continuous loop sustains fair candidate evaluation and compliance readiness.

- Document and communicate consistently: Use a candidate evaluation form that captures evidence, criteria, and next steps. Share timelines and expectations upfront. Clear documentation deters subjective detours, supports audits, and strengthens trust across generations without changing the bar for anyone.
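
Monitoring pass rates by age cohort, as described above, can start with a small audit script. The sketch below is an assumption-laden illustration: the cohort labels and counts are invented, and the 0.8 threshold borrows the "four-fifths" screening heuristic often used in adverse impact analysis. Treat a flag as a prompt to investigate a stage, not as a legal conclusion, and never feed cohort data back into individual decisions.

```python
def pass_rates(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """outcomes maps cohort -> (passed, total); cohorts with no candidates are skipped."""
    return {cohort: passed / total for cohort, (passed, total) in outcomes.items() if total > 0}


def impact_ratios(rates: dict[str, float]) -> dict[str, float]:
    """Each cohort's pass rate divided by the highest cohort pass rate."""
    best = max(rates.values())
    if best == 0:
        return {cohort: 0.0 for cohort in rates}
    return {cohort: round(rate / best, 2) for cohort, rate in rates.items()}


# Invented counts for one interview stage.
stage_outcomes = {"under_40": (30, 60), "40_plus": (14, 40)}

ratios = impact_ratios(pass_rates(stage_outcomes))
THRESHOLD = 0.8  # assumed screening threshold (four-fifths heuristic)
flagged = [cohort for cohort, ratio in ratios.items() if ratio < THRESHOLD]

print(ratios)   # {'under_40': 1.0, '40_plus': 0.7}
print(flagged)  # ['40_plus']
```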

Tips for evaluating interview candidates effectively

Evaluating interview candidates is like a code review before a major release: you compare work to standards, debate trade-offs, and log decisions for the repo. Apply the same rigor to your candidate evaluation process. Use these moves to boost signal, cut bias, and reach confident, fast decisions, without burning interviewers or candidates.

- Define outcomes and criteria upfront: Translate role goals into candidate evaluation criteria with behavioral anchors and acceptance tests. Share them before interviews, so every question maps to the bar, avoids improvisation, and reinforces candidate evaluation best practices across teams.

- Standardize interviews for comparability: Ask the same job-relevant questions in the same order, with scoring guides. This structure limits bias, improves note quality, and turns impressions into data your candidate evaluation process can compare across interviewers and markets.

- Add a job-relevant work sample: Use a realistic task with clear inputs, outputs, and a rubric. This candidate assessment boosts signal early, reduces talk bias, and lets diverse backgrounds demonstrate ability against the same bar, improving fairness and speed.

- Score with weights and evidence: Rate each competency independently, attach notes, and calculate a weighted summary. Weights stop charisma from dominating and keep must-haves central, turning interviews into comparable data your candidate evaluation process can defend during audits and debriefs.

- Debrief evidence-first, not opinion-first: Start with independent scores, then discuss deltas tied to the rubric and artifacts. Delay overall votes until after evidence. This avoids anchoring, speeds decisions, and keeps outcomes aligned with candidate evaluation criteria, not the loudest voice.

- Calibrate panels and audit bias: Shadow interviews, sample-score artifacts, review score distributions by interviewer. Coach outliers and refresh rubrics quarterly. These habits embed candidate evaluation best practices and reduce drift, improving fairness and accuracy without extra loops or slower hiring.

- Document decisions and communicate clearly: Use a simple template to capture ratings, risks, and rationale. Share timelines and expectations early. Transparent updates turn interviews into a respectful experience for candidates and preserve trust even when outcomes are no-hire decisions.

- Measure the funnel and iterate quarterly: Track time to fill, interview-to-hire ratio, and offer acceptance by stage. Use insights to update questions, weights, and training. This keeps candidate evaluation criteria current and anchors continuous improvement in observed outcomes, not assumptions.
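
Reviewing score distributions by interviewer, mentioned in the calibration tip above, can begin with a comparison of each interviewer's average against the panel average. This is a rough sketch with invented names and scores; `interviewer_drift` and the 0.75 tolerance are assumptions for the example. Interviewers rarely see identical candidates, so a flagged gap is a reason to coach or shadow, not proof of bias.

```python
from statistics import mean


def interviewer_drift(scores: dict[str, list[int]], tolerance: float = 0.75) -> dict[str, float]:
    """Return interviewers whose mean rating differs from the panel mean by more than tolerance."""
    panel_mean = mean(s for ratings in scores.values() for s in ratings)
    drift = {}
    for name, ratings in scores.items():
        gap = mean(ratings) - panel_mean
        if abs(gap) > tolerance:
            drift[name] = round(gap, 2)
    return drift


# Invented 1-5 ratings logged by four interviewers over a quarter.
quarter_scores = {
    "ana": [3, 4, 3, 4],
    "ben": [5, 5, 4, 5],
    "chen": [3, 3, 4, 3],
    "dee": [2, 3, 3, 2],
}
print(interviewer_drift(quarter_scores))  # {'ben': 1.25, 'dee': -1.0}
```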

Using behavioral and situational questions to measure potential

Using behavioral and situational questions is like running fire drills for big launches: safe rehearsals that reveal reflexes, judgment, and calm under pressure. When you simulate reality, the signal improves fast. That creates a structured candidate evaluation where potential shines beyond rehearsed stories and stays consistent across teams and markets globally.

- Pair behavioral and situational deliberately: Use behavioral questions for past actions and situational prompts to test judgment on new problems. With timed constraints and clear scoring, this mix strengthens interview evaluation methods and keeps your candidate evaluation process focused on job reality, not storytelling polish.

- Map to competencies and outcomes: Tie each question to specific competencies and success outcomes, with clearly defined acceptance thresholds. Writing explicit candidate evaluation criteria enables fair candidate evaluation, panel calibration, and quick debriefs because everyone scores the same behavior against the same bar.

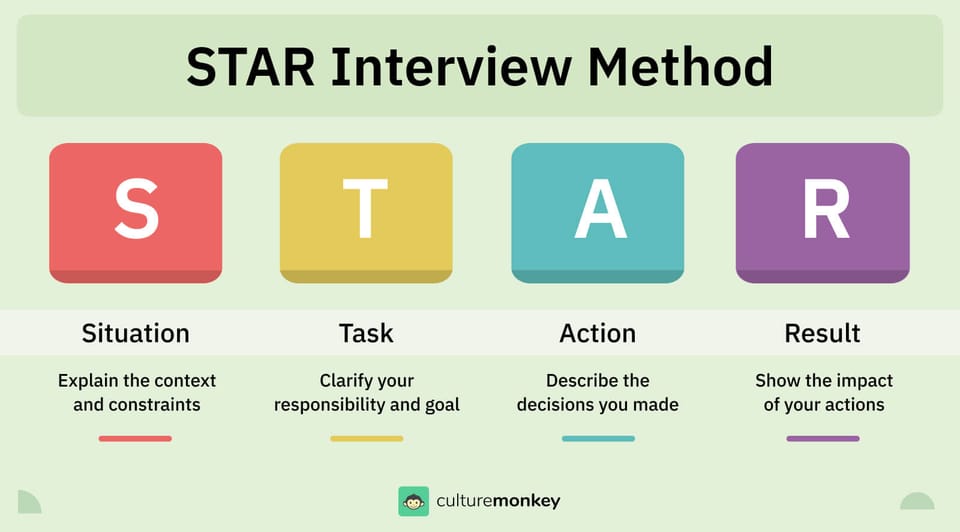

- Probe with STAR, then drill down: Ask for Situation, Task, Action, Result, then request metrics, trade-offs, and concrete lessons. This structure powers structured candidate evaluation and pairs well with a candidate assessment, revealing depth beyond rehearsed stories and protecting against vague, high-polish answers.

- Write realistic scenarios with anchored scores: Use dilemmas pulled from the role’s constraints and customers. Publish candidate evaluation criteria and risk notes for each outcome. Anchored rubrics enable fair candidate evaluation, surface judgment quickly, and keep interviewers from rewarding confident storytelling over thoughtful trade-offs.

- Normalize follow-ups and challenge assumptions: Tell interviewers to press for specifics, constraints, and alternatives. Standard follow-ups reduce halo effects and improve comparability. They reveal thinking under stress, reducing bias in candidate evaluation while giving candidates space to explain context without penalty when stakes feel high.

- Offer equivalent, accessible ways to show skill: Allow written, whiteboard, or code editor responses for the same competency, under identical timing and scoring. Equivalent formats maintain fairness, widen pipeline, and keep the bar steady while delivering a fair candidate evaluation that respects constraints candidates cannot control.

- Score independently, then debrief evidence-first: Enter ratings and notes before discussion to avoid anchoring. In debriefs, compare evidence to the rubric and candidate assessment results. This habit hardens your candidate evaluation process and reflects candidate evaluation best practices that scale across roles and regions.

- Close the loop with outcomes data: Track interview-to-hire ratio, pass rates by stage, and quality of hire. Compare patterns to question banks and scenario types, then update rubrics quarterly. Data-led tweaks strengthen fairness, reduce bias in candidate evaluation, and lift accuracy without adding interview loops.
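
Independent scoring followed by an evidence-first debrief implies a simple mechanic: collect ratings first, then spend debrief time only where interviewers disagree. A minimal sketch, assuming a 1–5 scale and a hypothetical `debrief_agenda` helper with invented interviewers and competencies:

```python
def debrief_agenda(scores: dict[str, dict[str, int]], min_gap: int = 2) -> list[tuple[str, int]]:
    """scores maps interviewer -> {competency: rating}.

    Returns (competency, gap) pairs where the highest and lowest independent
    ratings differ by at least min_gap, largest gap first.
    """
    competencies = set().union(*(ratings.keys() for ratings in scores.values()))
    agenda = []
    for competency in competencies:
        values = [ratings[competency] for ratings in scores.values() if competency in ratings]
        gap = max(values) - min(values)
        if gap >= min_gap:
            agenda.append((competency, gap))
    return sorted(agenda, key=lambda item: (-item[1], item[0]))


# Invented ratings entered before the debrief.
independent_scores = {
    "ana": {"judgment": 4, "communication": 3, "ownership": 4},
    "ben": {"judgment": 2, "communication": 3, "ownership": 5},
    "chen": {"judgment": 5, "communication": 4, "ownership": 4},
}
print(debrief_agenda(independent_scores))  # [('judgment', 3)]
```

Here the panel would open the debrief with the evidence behind the judgment ratings and skip competencies where scores already agree.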

Evaluating technical skills and role-specific expertise effectively

Evaluating technical skill is like using a flight simulator before a real takeoff: safe, repeatable, and brutally honest. You define candidate evaluation criteria, run the same maneuvers, and compare telemetry. That structure turns interviews into evidence, balances candidate assessment with reality, and protects fairness without slowing your candidate evaluation process.

- Map the stack to outcomes: List languages, tools, and environments, then tie each to concrete job outcomes. Document acceptance thresholds in a candidate evaluation template. Clear mapping stabilizes structured candidate evaluation and keeps interview evaluation methods job-relevant across teams and time in busy hiring cycles.

- Use realistic work samples: Design a scoped task with real inputs and a scored rubric. Limit time, publish expectations, and predefine pass levels. This candidate assessment yields comparable evidence, supports fair candidate evaluation, and reveals depth beyond rehearsed answers or platform familiarity.

- Run structured technical interviews: Ask the same competency-based questions in the same order, with scoring anchors. Probe design choices, trade-offs, and debugging steps. Consistency reduces noise, enables structured candidate evaluation, and makes evidence comparable across interviewers, regions, and time zones.

- Balance difficulty and relevance: Avoid puzzles; test applied skill at the role’s level. Pilot exercises with current team members, record completion times, and tune rubrics. Right-sized tasks maximize signal, reduce fatigue, and improve the candidate evaluation process without inflating drop-offs or unnecessary delays.

- Score with weighted criteria: Rate each competency independently, attach notes, and compute a weighted summary. Prioritize must-haves, cap nice-to-haves. Weighting blocks charisma from overwhelming data and strengthens fair candidate evaluation during debriefs, audits, and multi-location hiring while clarifying trade-offs for final decisions.

- Observe debugging and trade-offs: Ask candidates to vocalize hypotheses, log checks, and rollback plans. Evaluate how they choose between speed, safety, and simplicity. This reveals judgment under constraint and gives interview evaluation methods stronger, more comparable evidence than polished, memorized narratives in high-pressure scenarios.

- Ensure accessibility and fairness: Offer equivalent formats, live, written, or offline, without changing difficulty or scoring. Publish prep materials and time windows. These practices support fair candidate evaluation and reduce bias in candidate evaluation, especially across regions, bandwidth limits, and caregiving constraints that applicants face daily.

- Link to outcomes and iterate: Compare interview performance with ramp speed, defect rates, and peer feedback at 90 days. Adjust questions, weights, and tasks quarterly. This keeps your candidate evaluation criteria current and your candidate evaluation process predictive, fair, and audit-ready for leadership reporting.
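
One way to compare interview performance with 90-day outcomes is a plain Pearson correlation between interview scores and a later measure such as peer feedback. The sketch below is illustrative: the numbers are invented, `pearson` is written out by hand so the arithmetic is visible, and with only a handful of hires any coefficient is a weak signal that should be read alongside qualitative review.

```python
from math import sqrt


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length samples."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two samples of equal length with at least 2 points")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one sample has no variation")
    return covariance / sqrt(var_x * var_y)


# Invented data: weighted interview score and 90-day peer feedback for five hires.
interview_scores = [3.2, 4.1, 3.8, 4.6, 2.9]
peer_feedback_90d = [3.0, 4.0, 3.5, 4.5, 3.1]

r = pearson(interview_scores, peer_feedback_90d)
print(f"correlation: {r:.2f}")
```

A coefficient near zero for a given question or task, sustained over several quarters, is a hint that it may be a low-signal prompt worth retiring or rewriting.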

Group vs. individual evaluations: which approach works better?

Candidate evaluation matrix: what it is and how to create one

Building a candidate evaluation matrix is like setting guardrails on a highway: you choose lanes, speeds, and exits before traffic flows. That clarity turns noisy interviews into comparable signals. Use it to align panels, shorten post-interview evaluation, and turn opinions into traceable, auditable decisions during rapid growth and high-volume hiring cycles.

- Define columns and rows: Columns list competencies, weights, and acceptance thresholds, rows list candidates and evidence. Draft candidate evaluation questions per competency, so every cell captures behavior, outcome, and proof rather than impressions. Keep scales consistent across interviewers.

- Choose scoring scales: Use 1–5 with anchors for each competency and red flags. Define must-have pass marks and overall weights. This converts mixed notes into comparable numbers without losing nuance or context across stages, interviewers, and locations.

- Attach evidence rules: Require links, quotes, or artifacts for every rating. For each box, specify what good looks like and common pitfalls. Evidence-first notes speed debriefs and strengthen fairness during audits, post-interview evaluation reviews, and hiring board discussions.

- Integrate candidate assessment: Add work-sample and test results as dedicated columns with rubrics and cut scores. Publish timing, accessibility, and anti-cheating rules. This keeps tasks job-relevant, fair, and predictive while minimizing fatigue and inflated drop-offs across roles and regions.

- Calibrate before use: Sample-score a candidate evaluation sample together, compare deltas, and refine anchors. Practice with mock profiles until variance narrows. Shared calibration reduces bias, improves speed, and raises confidence in the matrix before real decisions under time pressure.

- Run independent scoring: Interviewers enter ratings and notes before debriefs. Hide overall votes until evidence is shared. This sequence reduces anchoring, improves fairness, and lets the matrix surface trade-offs clearly during a concise, decision-focused discussion with clear ownership.

- Tie to outcomes: Compare matrix scores with ramp speed, peer feedback, and retention at 90 days. Update weights, questions, and rubrics quarterly. Iteration keeps the matrix predictive, fair, and aligned with business reality as roles evolve and markets change.

- Embed templates and forms: Host a recruiter evaluation form and matrix as living documents in your ATS. Provide checklists, examples, and guidelines. Standard templates accelerate training and keep post-interview evaluation consistent across teams, locations, and role levels over time.
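
To make the matrix concrete, here is a small sketch of the column and row structure described above. Everything in it is assumed for illustration: the competencies, weights, pass marks, and the `Column` and `evaluate_row` names. It shows why must-have pass marks matter: a candidate can post the higher weighted score and still miss the bar.

```python
from typing import NamedTuple


class Column(NamedTuple):
    weight: float     # share of the weighted summary
    must_have: bool   # True if falling below pass_mark blocks a hire
    pass_mark: int    # minimum acceptable rating on the 1-5 scale


# Hypothetical matrix columns; weights sum to 1.0.
COLUMNS = {
    "system_design": Column(0.5, True, 3),
    "debugging": Column(0.3, True, 3),
    "mentoring": Column(0.2, False, 1),
}


def evaluate_row(ratings: dict[str, int]) -> dict:
    """Score one candidate row against the matrix columns."""
    failed = [name for name, col in COLUMNS.items()
              if col.must_have and ratings[name] < col.pass_mark]
    weighted = sum(col.weight * ratings[name] for name, col in COLUMNS.items())
    return {"weighted": round(weighted, 2), "failed_must_haves": failed, "meets_bar": not failed}


# Invented candidate rows.
matrix = {
    "candidate_a": {"system_design": 4, "debugging": 4, "mentoring": 2},
    "candidate_b": {"system_design": 5, "debugging": 2, "mentoring": 5},
}
results = {name: evaluate_row(row) for name, row in matrix.items()}
for name, result in results.items():
    print(name, result)
# candidate_a {'weighted': 3.6, 'failed_must_haves': [], 'meets_bar': True}
# candidate_b {'weighted': 4.1, 'failed_must_haves': ['debugging'], 'meets_bar': False}
```

Candidate B scores higher overall but falls below the debugging pass mark, which is exactly the trade-off the matrix is meant to surface in the debrief.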

Red flags to look for during candidate evaluation

Spotting red flags is like reviewing test logs after a failed deployment: patterns expose root causes fast. Lean on a disciplined candidate evaluation process to turn impressions into evidence, then compare behaviors to candidate evaluation criteria. The cues below help teams follow candidate evaluation best practices without slowing decisions today.

- Vague, story-only answers: Lots of flair, few specifics, and no metrics signal weak evidence. Ask for numbers, constraints, and outcomes, and log details in the candidate evaluation form so debriefs compare fairly against candidate evaluation criteria. That is a candidate evaluation best practice.

- Blame without ownership: Finger-pointing, vague attribution, and zero self-reflection suggest risk under pressure. Probe for personal decisions, trade-offs, and lessons. If they minimize mistakes, score low against candidate evaluation criteria and record examples in the candidate evaluation process for consistency.

- Ethical gray zones: Casual shortcuts with data privacy, accessibility, or compliance are red flags that no other strength offsets. Note specifics and ask situational follow-ups. Candidate assessment scores cannot offset ethics risks; document them in the candidate evaluation form and escalate under your candidate evaluation practices.

- Inconsistent evidence vs résumé claims: Gaps between résumés and work samples show signal inflation. Ask for artifacts, dates, and roles. If answers stay fuzzy, score competency low under candidate evaluation criteria and note discrepancies in the candidate evaluation process.

- Defensive with feedback: Dismissive reactions to coaching signal poor learning speed. Offer a small suggestion and watch how the candidate adjusts. Resistance across prompts indicates risk; reflect it in scores and notes in the candidate evaluation form to keep debriefs grounded and comparable.

- Task avoidance or generic AI output: Declining realistic tasks or submitting generic AI text reduces confidence. Publish expectations and rules early. Score the work, not the tool: poor alignment to candidate evaluation criteria is the red flag, not transparent assistance.

- Poor listening and meandering answers: Missing the question, interrupting, or rambling blocks signal. Use structured prompts and timeboxes, ask for summaries. Persistent drift lowers communication scores under candidate evaluation criteria, documented in your candidate evaluation process for panel alignment.

- Compensation-only focus, no role curiosity: Early, relentless pay talk without role discovery suggests misfit. Explore motivation, team context, and learning goals. If curiosity never appears, document concerns and lower engagement scores, because success in the role depends on genuine interest.

Reducing bias and improving fairness in candidate evaluation

Reducing bias is like adding guardrails to your code review pipeline: standards catch drift before it ships. In hiring, the same guardrails (clear criteria, structured steps, and shared notes) keep choices clean and fair at scale. Use the workflow below to turn good intent into repeatable, measurable, fair candidate evaluation.

- Standardize questions and scoring: Use structured interviews with shared prompts, follow-ups, and anchored ratings for all candidates. This reduces noise, halo effects, and bias in candidate evaluation, and keeps evidence comparable across locations within one consistent candidate evaluation process.

- Calibrate interviewers regularly: Sample-score the same answer, align on what “meets bar” looks like, and refresh examples quarterly. Calibration keeps ratings tight, applies candidate evaluation criteria consistently, and reduces variance that skews decisions toward confident storytellers rather than demonstrated skill.

- Blind early signals where lawful: Remove age proxies, school names, and identifiers during first screens where law permits. This backs candidate evaluation best practices and keeps interview evaluation methods focused on job evidence rather than pedigree or polished résumés.

- Use consistent work samples: Assign realistic tasks with identical inputs, timing, and scoring rubrics across candidates. Publishing expectations and pass levels builds trust, reduces second-guessing, and standardizes evidence inside the candidate evaluation process across locations, bandwidth constraints, and interviewer styles.

- Separate assessment from evaluation: Run candidate assessment to test skills objectively, then evaluate fit using candidate evaluation criteria. Treating them as distinct signals improves accuracy, reduces charisma effects, and helps panels explain trade-offs cleanly during evidence-first debriefs with decision ownership.

- Measure outcomes and adjust: Track pass rates, interview-to-hire ratio, and offer acceptance, then slice by cohort to spot gaps. Update questions, weights, or training, reducing bias in candidate evaluation and keeping your candidate evaluation process fair, current, and explainable.
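The "slice by cohort" check in the last step can be sketched as follows. This is a rough illustration under stated assumptions: the record shape, the function names `pass_rates_by_cohort` and `flag_gaps`, and the cohort labels are all hypothetical, and the 0.8 default echoes the "four-fifths" rule of thumb used in US adverse-impact analysis rather than anything this guide prescribes. Small samples will produce noisy ratios, so treat a flag as a prompt to look closer, not a verdict.

```python
from collections import defaultdict


def pass_rates_by_cohort(records):
    """records: iterable of (cohort, passed) pairs -> {cohort: pass rate}."""
    totals = defaultdict(int)
    passes = defaultdict(int)
    for cohort, passed in records:
        totals[cohort] += 1
        if passed:
            passes[cohort] += 1
    return {cohort: passes[cohort] / totals[cohort] for cohort in totals}


def flag_gaps(rates, min_ratio=0.8):
    """Return cohorts whose pass rate is below min_ratio times the best rate."""
    best = max(rates.values())
    if best == 0:
        return []  # nobody passed, so there is no gap between cohorts to flag
    return sorted(c for c, rate in rates.items() if rate / best < min_ratio)


# Hypothetical stage results: (cohort label, passed this stage?)
records = [
    ("A", True), ("A", True), ("A", True), ("A", True), ("A", False),
    ("B", True), ("B", True), ("B", False), ("B", False), ("B", False),
]

rates = pass_rates_by_cohort(records)
flagged = flag_gaps(rates)
print(rates, flagged)
```

Running the same check per interview stage shows where in the funnel a gap opens, which is usually more actionable than a single end-to-end number.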

How strong candidate evaluation improves retention and long-term performance

Strong candidate evaluation is like refining your customer onboarding funnel: fix friction upfront, and churn drops later. When teams hire against clear candidate evaluation criteria, ramp time shrinks, managers coach better, and engagement compounds. Here’s how disciplined signals in the candidate evaluation process translate into retention and long-term performance.

Strong candidate evaluation links hiring decisions to long-term success by matching skills, values, and growth potential early.

Structured rubrics and evidence-first debriefs improve fit, speed ramp-up, boost retention, and ensure every new hire performs consistently and thrives within company culture.

- Better role–person fit: Clear candidate evaluation criteria filter for must-have behaviors and skills. That front-loads fit, reduces early exits, and accelerates contribution, lifting quality of hire and first-year retention while keeping interview loops short and consistent across teams.

- Faster ramp and productivity: Structured candidate evaluation targets learning agility and execution. Managers receive evidence to tailor onboarding. The result is fewer false starts, quicker milestones, and steadier performance curves, which compounds retention by reducing early frustration in the first ninety days.

- Stronger manager-employee match: Evidence-first debriefs align expectations before day one. When trade-offs are explicit, managers coach to real gaps, not assumptions. That alignment decreases friction, increases psychological safety, and improves long-term performance reviews and growth conversations measurably over time.

- Lower attrition risk signals: The candidate evaluation process captures concerns early: motivation, constraints, and context. Documented risks inform offers, role shaping, or honest declines. Addressed upfront, these signals reduce regretted attrition and rehiring costs without lengthening cycles or harming candidate experience.

- Culture and values durability: Situational questions and reference checks test ethics and collaboration. Hiring to shared standards creates steadier teamwork and fewer toxic surprises, boosting engagement and multi-year retention through clearer norms and consistent, fair candidate evaluation company-wide.

- Better coaching data: Candidate evaluation criteria produce specific notes on strengths and gaps. Managers convert them into 30-60-90 plans and targeted support. Clear starting points accelerate mastery, improve peer feedback, and increase long-term performance without guessing what to teach first.

- Closed-loop improvement: Track quality of hire, time to productivity, and retention by stage. Feed outcomes back into questions, weights, and training. The candidate evaluation process gets sharper each quarter, reducing mis-hires and lifting long-term performance across roles and regions.

When strong evaluation practices are in place, hiring doesn’t just end with the right offer, it sets the stage for lasting performance. The next step? Ensuring hiring managers know exactly where to focus during evaluations to keep that consistency and quality intact.

What hiring managers should focus on during evaluations

Great evaluations are like flight checklists before takeoff: short, repeatable, impossible to skip. As a hiring manager, your job isn’t to be dazzled; it’s to gather clean signals. Anchor on outcomes, test consistently, and record evidence that travels across panels and later informs onboarding, coaching, and decisions company-wide.

- Define outcomes and must-haves: Write the role’s top outcomes, then translate them into candidate evaluation criteria with clear behavioral anchors and acceptance tests. Share this bar before interviews so every question maps to it, limiting improvisation and keeping panels aligned during fast debriefs.

- Plan evidence and assessments: Decide which candidate assessment adds real signal (work sample, coding task, case, or writing sample), and publish timing, scope, and scoring rules. Keep tasks job-relevant and accessible to support fairness, widen the pipeline, and reduce drop-offs without adding loops or delays.

- Standardize interviews and follow-ups: Ask the same job-relevant questions in the same order, and include mandated follow-ups that probe constraints, trade-offs, and results. Consistency turns impressions into comparable data and strengthens your candidate evaluation process across locations, levels, and interviewer styles over time.

- Score with weights and evidence: Rate each competency independently, attach concrete notes or artifacts, and calculate a weighted summary so must-haves dominate over charm. Hide overall votes until evidence is shared to avoid anchoring and keep debates short, focused, and fair for everyone.

- Calibrate panels, coach outliers: Sample-score the same recorded answer, compare deltas, and align on what “meets bar” looks like for each competency and level. Review distributions quarterly, give feedback to high-variance interviewers and refresh rubrics. Calibration improves fairness, speed, and decision consistency.

- Debrief evidence-first, fast: Start with independent scores, then discuss mismatches tied to the rubric and candidate assessment results. Decide hire or no-hire with explicit trade-offs, capture rationale in the candidate evaluation template to keep audits and future coaching simple and searchable later.

- Communicate clearly, respect time: Share stages, timelines, prep guides, and accessibility options upfront, with contacts for help. Transparent updates follow candidate evaluation best practices, strengthen trust, and raise completion rates without extending cycles, lowering the bar, or confusing candidates mid-process.

- Measure outcomes, iterate quarterly: Track time to fill, interview-to-hire ratio, pass rates by stage, and offer acceptance. Compare patterns to question banks and weights, update candidate evaluation criteria and training based on results, not hunches. Close loops and publish improvements company-wide quarterly.
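The "score with weights and evidence" step above, including hiding overall votes until everyone has scored, can be sketched in a few lines. This is a minimal illustration under assumptions: the competency names, the 1-5 scale, the weights, the panelist names, and both function names are hypothetical, and a real scorecard tool would also store the notes and artifacts behind each rating.

```python
def weighted_score(ratings, weights):
    """Weighted average of competency ratings (both dicts keyed by competency)."""
    if set(ratings) != set(weights):
        raise ValueError("ratings and weights must cover the same competencies")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(ratings[c] * weights[c] for c in weights) / total_weight


def votes_to_reveal(votes, panel):
    """Return overall votes only after every panelist has submitted one."""
    missing = set(panel) - set(votes)
    return {} if missing else dict(votes)


# Hypothetical 1-5 ratings and weights; must-haves carry the larger weights.
ratings = {"problem_solving": 4, "ownership": 5, "communication": 3}
weights = {"problem_solving": 3, "ownership": 2, "communication": 1}
summary = weighted_score(ratings, weights)  # (4*3 + 5*2 + 3*1) / 6

panel = ["ana", "ben", "chi"]
partial = votes_to_reveal({"ana": "hire", "ben": "no-hire"}, panel)
complete = votes_to_reveal({"ana": "hire", "ben": "no-hire", "chi": "hire"}, panel)
```

Dividing by the total weight keeps the summary on the same 1-5 scale as the individual ratings, so weights can be retuned each quarter without changing what a given score means.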

Best candidate evaluation questions to ask after interviews

Post-interview debriefs are like post-mortems after a launch: you gather logs, isolate root causes, and decide what to fix next. Treat the candidate evaluation process the same way. Ask targeted follow-ups that turn opinions into evidence, align with candidate evaluation criteria, and expose signals you can trust.

- Evidence of outcomes: What results did the candidate deliver in similar contexts? Capture metrics, scope, and constraints. Do those outcomes map to our must-have competencies and the role’s first-90-day goals? Note examples linking results to repeatable behaviors, not lucky breaks. Look for patterns.

- Decision quality under constraints: When choices were messy, which trade-offs did the candidate pick, and why? Ask for risks considered and mitigations used. Did their judgment balance speed, safety, and scope? Probe alternatives they rejected. Record concrete signals against your rubric, not impressions.

- Learning velocity: How did the candidate upskill for a new domain or tool quickly? Ask for the resource path, checkpoints, and measurable gains. Did they adapt based on feedback? Look for iteration speed and self-correction. Evidence here predicts ramp time reliably across roles.

- Collaboration and conflict: Describe a tough stakeholder disagreement. What changed minds? What stayed blocked, and why? Ask for tactics, timelines, and outcomes. You want respectful pushback, clear alignment moves, and recovery plans. Score listening, clarity, and follow-through using anchored candidate evaluation criteria.

- Execution and ownership: When plans slipped, what did the candidate do next? Ask for prioritization choices, trade-offs, and risk calls. Did they surface blockers early and land a minimum viable outcome? Look for dependable delivery patterns under pressure, not heroic last-minute rescues.

- Ethics and judgment: Share a realistic dilemma tied to privacy, accessibility, or compliance. What would they do, step by step, and why? Ask for stakeholders informed and safeguards used. You’re testing values in action, not slogans. Document specifics against non-negotiable standards.

- Communication clarity: After a complex question, ask the candidate to summarize the problem, decision, and result in thirty seconds. Then request the same for a non-expert audience. You’re measuring structure, plain language, and audience awareness. Notes should include quotes, not paraphrases.

- Role fit and motivation: Why this role, now? What trade-offs would they accept for impact? Ask for learning goals and conditions where they thrive. Look for intrinsic drivers, not only compensation levers. Map motivations to team reality to reduce early attrition risk.

How Hummer AI supports hiring teams with smarter candidate evaluation

Using Hummer AI is like upgrading from manual spreadsheets to an autopilot checklist in busy hiring sprints. It standardizes questions, captures evidence, and flags gaps before debriefs. With built-in scorecards, work-sample rubrics, and analytics, hiring teams move faster while improving fairness and signal across roles. Managers coach sooner with data they can trust.

- Structured scorecards and question banks: Hummer AI enforces consistent prompts, follow-ups, and anchored ratings. Panels capture evidence, not impressions. Standardization improves fairness, reduces bias, and turns the candidate evaluation process into comparable data trusted in debriefs and audits.

- Work-sample automation and rubrics: Hummer AI hosts realistic tasks with clear inputs and scoring anchors. It auto-collects artifacts, timestamps, and notes. Consistent candidate assessment raises signal, trims fatigue, and proves fairness for distributed hiring where bandwidth, schedules, and formats vary.

- Bias checks and calibration analytics: The platform shows score distributions across interviewers and stages. Outlier alerts, sample-scoring, and nudges reduce drift. These checks embed candidate evaluation best practices and keep decisions consistent without adding loops or slowing time to fill.

- Evidence-first debriefs: Hummer AI hides votes until notes and ratings are in. Debrief views compare evidence to candidate evaluation criteria, weights, and risks. This sequence reduces anchoring, shortens meetings, and produces defensible decisions that travel into onboarding and 30-60-90 planning.

- Compliance, privacy, and accessibility: Built-in consent logs, PII handling, and accessible tasks keep hiring compliant. Standardized questions and timing support fair candidate evaluation. Exports and audit trails satisfy reviews, while accommodations preserve a level playing field without changing difficulty or scoring rules.

- ATS integrations and automation: Two-way ATS sync pushes templates, pulls feedback, and triggers reminders. Hummer AI automates nudges, deadlines, and panel setup, reducing coordinator load. The candidate evaluation process stays on track, with fewer no-shows and faster decisions across time zones.

- Outcome tracking and iteration: Dashboards connect interview signals with ramp speed, retention, and quality. Leaders see which questions predict outcomes, update weights and training. The loop keeps criteria current, strengthens fairness, and compounds gains each quarter without adding interview loops.

Conclusion

Great hiring isn’t luck, it’s a disciplined candidate evaluation engine that turns interviews into comparable evidence. When teams agree on outcomes, use structured interviews and job-relevant work samples, and debrief evidence-first, the results are predictable: better fit, faster ramp, lower attrition, and stronger long-term performance. A consistent, fair process also elevates candidate experience, strengthens employer brand, and protects decisions with clear audit trails.

Hummer AI makes this rigor practical at scale. It standardizes scorecards and question banks, hosts work samples with anchored rubrics, and enforces independent scoring before debriefs. Bias checks flag rating drift; integrations centralize notes, artifacts, and timelines; analytics tie interview signals to ramp, retention, and quality. Hiring managers get cleaner signals and shorter meetings; candidates get clarity and respect.

The payoff is compounding: every cycle improves your playbook. With Hummer AI, organizations replace guesswork with measurable, fair, and repeatable decisions, so the right people join, thrive, and stay.

FAQs

1. Can AI help improve candidate evaluations?

Yes. AI helps evaluate candidates by turning interviews and work samples into structured data. It can assess skills with calibrated rubrics, support technical assessments, and surface patterns across a large candidate pool. In high-volume hiring cycles, teams save time, reduce noise, and ensure fair comparisons while highlighting each candidate's ability and soft skills, without replacing human judgment.

2. What are examples of candidate evaluation comments?

Use an evaluation form with concise, evidence-led comments, such as: “Demonstrated strong communication abilities under pressure,” “Technical skills matched the specific skills required for the role,” “Candidate’s body language showed confidence and attentive listening,” “Growth mindset evident,” and “Stakeholder alignment clear.” Tie notes to outcomes, artifacts, and risk flags; keep wording neutral and measurable. Avoid bias and cite examples precisely.

3. What is a candidate assessment?

A candidate assessment is a standardized way to assess role-specific skills through tasks, simulations, or tests. It measures technical skills, problem-solving abilities, and a candidate's overall ability with scoring rubrics. Results complement interviews, feeding the evaluation process with comparable evidence, not hunches, so teams can prioritize learning speed and role readiness confidently across varied contexts.

4. What is a good employee evaluation score?

A good score reflects job performance predictors, not perfection. Define anchors per competency, then classify ranges: hire, hold, or decline. Top contenders show consistent fours or fives across work samples, communication, and soft skills, and notes address the candidate’s body language thoughtfully. Use ranges to shortlist candidates aligned with the existing team, role realities, and manager expectations.
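As a rough sketch, the hire, hold, or decline ranges can be expressed as a small classification rule. The "consistent fours or fives" bar for a hire comes from the answer above; the hold floor of 3, the 1-5 scale, the competency names, and the function name `classify` are assumptions for illustration, so set your own anchors per role.

```python
def classify(ratings, hire_floor=4, hold_floor=3):
    """Map per-competency ratings (1-5) to 'hire', 'hold', or 'decline'.

    Uses the lowest rating so one weak must-have cannot be averaged away.
    """
    if not ratings:
        raise ValueError("at least one competency rating is required")
    lowest = min(ratings.values())
    if lowest >= hire_floor:
        return "hire"
    if lowest >= hold_floor:
        return "hold"
    return "decline"


# Hypothetical scorecards
strong = {"work_sample": 5, "communication": 4, "soft_skills": 4}
mixed = {"work_sample": 5, "communication": 3, "soft_skills": 4}
weak = {"work_sample": 2, "communication": 4, "soft_skills": 5}

decisions = [classify(strong), classify(mixed), classify(weak)]
```

Gating on the lowest rating is one design choice among several; a team that prefers averages can swap in a weighted mean, as long as the rule is agreed before interviews begin.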

5. How do I avoid bias when evaluating candidates?

Use structured questions, anchored ratings, and independent notes before debrief. Blind early signals where lawful, standardize tasks, and review pass rates. Train panels to judge applicants on evidence of the candidate’s ability and soft skills, not pedigree. Calibrate quarterly with the existing team. Decide with rubrics and shortlist candidates consistently to keep decisions fair across levels and locations over time.

6. What does candidate evaluation mean in the hiring process?

It’s a structured way to turn interviews, work samples, and references into comparable evidence during the recruitment process. Teams rate competencies with an evaluation form and rubrics, then combine signals for a well-rounded view. The goal is deciding fit, forecasting ramp, and reducing risk before extending a job offer, with clear decision ownership and notes.

7. Which candidate evaluation methods work best for recruiters?

Blend structured interviews, realistic work samples, and calibrated technical assessments. Use anchored scoring to assess ability and technical depth consistently. Independent scoring before debrief limits anchoring. Evidence-first debriefs, plus reference checks, help recruiters compare apples to apples and select methods that fit role risk, volume, and timeline for stronger rankings among finalists and clearer trade-offs.

8. What impact does candidate evaluation have on retention and performance?

Strong evaluation links hiring choices to future job performance. Evidence on capability, people skills, and communication abilities supports onboarding plans and smoother collaboration. Managers align expectations early, reduce mismatches, and improve retention. Clear data also guides compensation, promotions, and coaching, turning early signals into durable results across quarters and lowering ramp risks meaningfully for teams.